So this was the last week of class in 148. we didn't cover any new material really, it was mostly just review. The one thing I will mention is what is called memoization. Memoization is basically a preventative measure to stop a function from doing unnecessary work. For example, when taking a factorial of a number, if you already know certain Fibonacci numbers up to a point, its a lot easier and efficient to take the already calculated Fibonacci numbers and add on to them, than it is to calculate the entire sequence once again. So, in memoization, we store calculated values inside a structure such as a list or dictionary, and when we call the function, we check this structure for pre-existing material we can use.

Anyways, as for final thoughts: I did enjoy 148 as a whole, and I have a new found love of recursion (though linked lists...not so much). I felt the work load was fair, and the course was manageable. Even if it wasn't required, it is definitely a course a would recommend people to take if they're interested in the subject matter. Good luck to all my classmates for the future!

CSC148H1S SLOG

Sunday, April 6, 2014

Sunday, March 30, 2014

Week 11 - Sorting and Efficiency

When coding, it is important to make code efficient; after all, who would willingly choose to use a slower code? While two algorithms may only differ by .001s over say, a list of 10 numbers, that difference between algorithms may grow exponentially over a list of hundreds of thousands of numbers.

Im not going to go over how the different algorithms sort, as that was my post for last week. This post is mainly going to discuss the efficiency of the sorting algorithms. So, all the algorithms I mentioned - Insertion, Quick, and Merge sort - all are capable of sorting a list of numbers. However, Quick and Merge sort are much more efficient choices. To show this, we can look at their worst run times, denoted by O(?). This is called big-O notation.

Insertion Sort

Insertion sort is O(n^2). This means that in the worst case, the runtime is proportional to the square of the size of the list. In insertion sort, the worst case would be a reverse sorted list, as each item would have to be moved to the back (left) of the sorted section.

Quick and Merge Sort

Quick and Merge Sort are denoted as O(nlogn). The logn represents the runtime of splitting a list in two pieces. The we choose log of n, as it is relative to how many splits of the list we perform. The n in the O(nlogn) is the efficiency of the action performed after the list is done splitting. For example, it is the runtime of the merge action in merge sort. Combining these two; the splitting and the merge, we get a total runtime of O(nlogn).

This graph shows us various big-O runtimes. As we can see, over large sets of data, O(logn) takes less time than O(n^2). We can then conclude that Merge and Quick sort are more efficient than insertion sort, and thus in applications of sorting, we should use merge and quick sort.

Theoretically, the best possible sorting algorithm is O(1). that is to say that no matter the size of the data input, the runtime of the function is constant. Of course, an algorithm with this runtime has yet to be discovered over all sets of data.

It is important to note that big O notation only concern the worst-case. Under ideal conditions, or even average conditions, it could be possible for two algorithms to perform equally, only differing under big-O restrictions. You should therefore also consider average and best runtimes, in addition to the worst case, when deciding which algorithm to use.

Thats it for this entry, and my SLOG as well! Initially I had doubts about the usefulness of SLOGS, as did many of my friends, but as I have written them, I do have to admit that they have helped me better understand some of the concepts we have learned. I enjoyed the course, and wish everyone the best!

Im not going to go over how the different algorithms sort, as that was my post for last week. This post is mainly going to discuss the efficiency of the sorting algorithms. So, all the algorithms I mentioned - Insertion, Quick, and Merge sort - all are capable of sorting a list of numbers. However, Quick and Merge sort are much more efficient choices. To show this, we can look at their worst run times, denoted by O(?). This is called big-O notation.

Insertion Sort

Insertion sort is O(n^2). This means that in the worst case, the runtime is proportional to the square of the size of the list. In insertion sort, the worst case would be a reverse sorted list, as each item would have to be moved to the back (left) of the sorted section.

Quick and Merge Sort

Quick and Merge Sort are denoted as O(nlogn). The logn represents the runtime of splitting a list in two pieces. The we choose log of n, as it is relative to how many splits of the list we perform. The n in the O(nlogn) is the efficiency of the action performed after the list is done splitting. For example, it is the runtime of the merge action in merge sort. Combining these two; the splitting and the merge, we get a total runtime of O(nlogn).

This graph shows us various big-O runtimes. As we can see, over large sets of data, O(logn) takes less time than O(n^2). We can then conclude that Merge and Quick sort are more efficient than insertion sort, and thus in applications of sorting, we should use merge and quick sort.

Theoretically, the best possible sorting algorithm is O(1). that is to say that no matter the size of the data input, the runtime of the function is constant. Of course, an algorithm with this runtime has yet to be discovered over all sets of data.

It is important to note that big O notation only concern the worst-case. Under ideal conditions, or even average conditions, it could be possible for two algorithms to perform equally, only differing under big-O restrictions. You should therefore also consider average and best runtimes, in addition to the worst case, when deciding which algorithm to use.

Thats it for this entry, and my SLOG as well! Initially I had doubts about the usefulness of SLOGS, as did many of my friends, but as I have written them, I do have to admit that they have helped me better understand some of the concepts we have learned. I enjoyed the course, and wish everyone the best!

Sunday, March 23, 2014

Week 10

This week was based on sorting, with 3 main types in mind.

Insertion Sort

Insertion sort is based around a key in the list. The key starts at the left end, moving one space after each iteration. The idea here is that items to the left of the key are already sorted. When the key moves an index, it takes the value at that index and places it at the appropriate place in the sorted list.

Quick Sort

Quick sort is the process of splitting a large list into smaller, manageable problems. We choose a randomized 'pivot' point. All items smaller than the pivot are placed in a new left list, and the items that are greater are placed in a new right list. We recursively call Quicksort on each of these smaller lists, and thus are left with a completely sorted list at the end.

Merge Sort

The idea of merge sort is to continually split a list in halves, calling merge sort on each halve. Once a list is turned into a product of single-item lists. Then these items are compared in the small halves are compared to each other, and concatenated into a large sorted list. This process continues on the growing lists, until all items are included. I'm presuming that wasn't very clear to some people, so here's a picture:

It is easier to think of in 2 stages: First, we recursively call merge sort to product single-item lists. Then, in each 'set' of single-item lists, we compare the values and produce a parent list. This continues until we have a sorted list containing all original items.

Insertion Sort

Insertion sort is based around a key in the list. The key starts at the left end, moving one space after each iteration. The idea here is that items to the left of the key are already sorted. When the key moves an index, it takes the value at that index and places it at the appropriate place in the sorted list.

Quick Sort

Quick sort is the process of splitting a large list into smaller, manageable problems. We choose a randomized 'pivot' point. All items smaller than the pivot are placed in a new left list, and the items that are greater are placed in a new right list. We recursively call Quicksort on each of these smaller lists, and thus are left with a completely sorted list at the end.

Merge Sort

The idea of merge sort is to continually split a list in halves, calling merge sort on each halve. Once a list is turned into a product of single-item lists. Then these items are compared in the small halves are compared to each other, and concatenated into a large sorted list. This process continues on the growing lists, until all items are included. I'm presuming that wasn't very clear to some people, so here's a picture:

It is easier to think of in 2 stages: First, we recursively call merge sort to product single-item lists. Then, in each 'set' of single-item lists, we compare the values and produce a parent list. This continues until we have a sorted list containing all original items.

Sunday, March 16, 2014

Week 9

Binary Search Trees (BST) were this weeks main topic. The different of these specialized Binary trees is a certain condition: Data in the left subtree is less than that in the root, which is in turn less than the data in the right subtree (left<root<right).

This type of tree holds a distinct advantage in search for specified values. Say for example, in reference to the picture, we are attempting to search for 18. in this case, once we look at the root 5 we can immediately come to the conclusion that 18 must be in the right subtree (as left<root<right). Essentially, we have cut our searching time in half, as we can neglect to look through the left subtree.

In terms of efficiency, binary search is type O(lg n), while a more linear search through all elements yields O(n). Over large sets of data, lgn < n, so we conclude it is more efficient.

Lab08

This weeks lab was personally, one of the more challenging ones. The (main)focus of this lab was to implement the method count_less in a BST class. We had to implement it recursive in 3 different coding styles, and then iteratively. For me, I tend to prefer a single style of coding and stick with it. So while one recursive solution came easily, the others took a little more thought. I can definitely see the advantage of knowing multiple ways of approaching a problem though, as compared to my more single-minded approach.

Exercise03

This exercise was very useful for me, as a way of better understanding trees. After doing parts a and b, I feel like a have a much stronger grasp on tree sorting, tree representations, and of course, I got practice on recursion. The surprising thing I found out of this was how often I seem to neglect base cases/simple cases. Especially in part B, while my code worked flawlessly for large data sets, it buckled under simple examples of None. Of course, I was able to fix it by the next auto test, but it opened my eyes as to not just testing for advanced circumstance, but also the simple (which I had previously assumed would obviously work).

This type of tree holds a distinct advantage in search for specified values. Say for example, in reference to the picture, we are attempting to search for 18. in this case, once we look at the root 5 we can immediately come to the conclusion that 18 must be in the right subtree (as left<root<right). Essentially, we have cut our searching time in half, as we can neglect to look through the left subtree.

In terms of efficiency, binary search is type O(lg n), while a more linear search through all elements yields O(n). Over large sets of data, lgn < n, so we conclude it is more efficient.

Lab08

This weeks lab was personally, one of the more challenging ones. The (main)focus of this lab was to implement the method count_less in a BST class. We had to implement it recursive in 3 different coding styles, and then iteratively. For me, I tend to prefer a single style of coding and stick with it. So while one recursive solution came easily, the others took a little more thought. I can definitely see the advantage of knowing multiple ways of approaching a problem though, as compared to my more single-minded approach.

Exercise03

This exercise was very useful for me, as a way of better understanding trees. After doing parts a and b, I feel like a have a much stronger grasp on tree sorting, tree representations, and of course, I got practice on recursion. The surprising thing I found out of this was how often I seem to neglect base cases/simple cases. Especially in part B, while my code worked flawlessly for large data sets, it buckled under simple examples of None. Of course, I was able to fix it by the next auto test, but it opened my eyes as to not just testing for advanced circumstance, but also the simple (which I had previously assumed would obviously work).

Sunday, March 9, 2014

Week 8

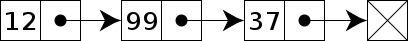

This week and last dealt with linked lists. Linked lists are similar to regular python lists, but with a few key differences. It is easier to view it pictorially:

Linked lists are named as such because each item is linked to the next. Each item has a head, such as 12 or 99, and a rest, which represent all the item after the value. At the end of the list is an empty node. By doing this, each rest will in itself refer to a small linked list; the rest of the value '12' would be a linked list of 99 and 37. As you may infer, since each rest is in itself a similar type of structure, we can view it recursively. But beyond that, I don't see what advantages we gain by using linked lists over regular lists; they seem harder to navigate and code, and have caused me nothing but trouble. I then looked over the internet for a use of linked lists, and found a few answers that gives an advantage to linked lists:

Linked lists are named as such because each item is linked to the next. Each item has a head, such as 12 or 99, and a rest, which represent all the item after the value. At the end of the list is an empty node. By doing this, each rest will in itself refer to a small linked list; the rest of the value '12' would be a linked list of 99 and 37. As you may infer, since each rest is in itself a similar type of structure, we can view it recursively. But beyond that, I don't see what advantages we gain by using linked lists over regular lists; they seem harder to navigate and code, and have caused me nothing but trouble. I then looked over the internet for a use of linked lists, and found a few answers that gives an advantage to linked lists:

- you want insertions/deletions with constant run-time

- splitting and joining linked lists is efficient

- linked lists share a tree-like structure, that may be useful in some situations

- linked lists are flexible to modification

Sunday, February 23, 2014

Week 7 - Recursion

Recursion is the application of calling a function inside itself to solve a set of similar problems. As an example:

def non_list(L):

'''(list) -> int

Return number of items in list that are not lists

>>>non_list([1,'a',[[3],[]]])

3

'''

count = 0

for i in L:

if isinstance(i,list):

count += non_list(i)

else:

count += 1

return count

In this example we are iterating over items of a list. In the even the item found is another list, we are able to call the same function, non_list, on the new list. This process repeats for all nested instances of lists, only counting non-list items. The recursive application in this function is how we are able to call non_list from inside itself, to solve the same problem for the inner lists. It is very useful in solving a set of similar problems, however I have not learned any of the applications outside of that yet (if they exist). It seems like recursion is restricted in what it can and cannot do, as to call itself it seemingly must be a similar problem.

That being said we have definitely taken advantage of recursion in 148. It has been used our first assignment, to assess and analyze trees, as well as in our lab of linkedlists. It was extremely advantageous and reduced the time needed to solve problems like those, so I can definitely see why it was learned. Initially, and from reading slogs I can see others often agree, recursion was a bit difficult to wrap our heads around; we had never traced anything like a recursive function. But through course readings, lecture examples, and hands-on work in the labs and assignment, I feel that I am now much more adjusted to the concept, and can use it to my advantage in the future.

def non_list(L):

'''(list) -> int

Return number of items in list that are not lists

>>>non_list([1,'a',[[3],[]]])

3

'''

count = 0

for i in L:

if isinstance(i,list):

count += non_list(i)

else:

count += 1

return count

In this example we are iterating over items of a list. In the even the item found is another list, we are able to call the same function, non_list, on the new list. This process repeats for all nested instances of lists, only counting non-list items. The recursive application in this function is how we are able to call non_list from inside itself, to solve the same problem for the inner lists. It is very useful in solving a set of similar problems, however I have not learned any of the applications outside of that yet (if they exist). It seems like recursion is restricted in what it can and cannot do, as to call itself it seemingly must be a similar problem.

That being said we have definitely taken advantage of recursion in 148. It has been used our first assignment, to assess and analyze trees, as well as in our lab of linkedlists. It was extremely advantageous and reduced the time needed to solve problems like those, so I can definitely see why it was learned. Initially, and from reading slogs I can see others often agree, recursion was a bit difficult to wrap our heads around; we had never traced anything like a recursive function. But through course readings, lecture examples, and hands-on work in the labs and assignment, I feel that I am now much more adjusted to the concept, and can use it to my advantage in the future.

Week 6

This weeks' series of lectures was based are trees, which can be used as a visual representation of data.

.

.

The parts of this tree, called nodes, can be described with more specific terms;

The node that serves as the spawning point for other nodes is called the root. ie: 8

A leaf is describe as a node with no children; it is the end of the path. ie: 1,4,7

The 'middle' nodes are internal nodes, such as 3,6, and 10.

Trees can be analyzed through another subject we have learned; recursion. As an example, lets say we want to count the number of nodes in this tree. We can create a count function such that

In this example we use recursion to access and count the nested lists (aka the children) of the tree. We are able to count all the nodes using this method. The reason recursion is so useful is because a tree often presents multiple paths leading to multiple ends. Through recursion however we can account for all these paths, and come to conclusions based on the whole picture.

.

.The parts of this tree, called nodes, can be described with more specific terms;

The node that serves as the spawning point for other nodes is called the root. ie: 8

A leaf is describe as a node with no children; it is the end of the path. ie: 1,4,7

The 'middle' nodes are internal nodes, such as 3,6, and 10.

Trees can be analyzed through another subject we have learned; recursion. As an example, lets say we want to count the number of nodes in this tree. We can create a count function such that

def count(t: Tree) -> int:

"""How many nodes in this Tree?

>>> tn2 = Tree(2, [Tree(4), Tree(4.5), Tree(5), Tree(5.75)])

>>> tn3 = Tree(3, [Tree(6), Tree(7)])

>>> tn1 = Tree(1, [tn2, tn3])

>>> count(tn1)

9

return 1+ sum([count(c) for c in t.children])

Subscribe to:

Posts (Atom)